In a previous LinkedIn post about vibe coding gone horribly wrong, I talked about the dangers of just going with the “vibes” and why we should still care about the resulting code. This blog post focuses on a different topic: secure coding when using an AI coding assistant or an application development platform that uses AI-generated code behind the scenes.

Secure coding and AI are not always best friends. Let’s start by explaining a perhaps lesser-known cyberattack called a “supply chain attack” and how it relates to AI-generated code. It’s a hacker’s wet dream to infiltrate the original source code or the libraries used by software applications around the world. The hacker’s mission is to plant malicious code that can steal data and passwords. The application is then built with the malicious code inside and shipped by a trusted software distributor. Most likely, this malicious code will remain undetected for a long time, giving the hacker plenty of time to steal your data.

This kind of attack is not new, and it takes a lot of skill and time to prepare, but when it succeeds, it’s probably the most powerful cyberattack there is. Imagine what could happen if a web server or a popular online meeting application used around the world were compromised at its source. In May 2001, the open-source Apache web server, which at the time hosted over 60% of the world’s websites, nearly fell victim to a supply chain attack. A public server hosting the source code repositories and binary releases of the Apache web server was compromised. Fortunately, the open source community discovered the intrusion in time to prevent the attackers from injecting malicious code.

A more recent example of a supply chain attack happened just a week ago, on September 8. About 20 npm packages, which together are downloaded around 2 billion times a week, were compromised. The compromised versions were distributed through the trusted npm registry but contained malicious code that intercepted cryptocurrency activity in the browser and redirected funds to wallets controlled by the attacker. The malicious code was also obfuscated to stay hidden as long as possible. The attacker got in by taking control of the npm account of a trusted open source maintainer. It all started with a phishing email asking the maintainer to update their MFA (Multi-Factor Authentication) settings. The email appeared legitimate but was sent from the malicious domain npmjs.help, which had been registered just a few days earlier, on September 5.
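One practical takeaway from this incident is to check whether your own dependency tree resolved to any of the compromised versions. Below is a minimal Python sketch that scans an npm `package-lock.json`, assuming the `lockfileVersion` 2/3 layout with a top-level `packages` map. The function name `find_compromised` is hypothetical, and the two entries in `COMPROMISED` are illustrative only. Take the authoritative list of affected packages and versions from the advisories referenced at the end of this post.

```python
import json

# Illustrative entries only: verify names and versions against the published
# advisories before relying on this list.
COMPROMISED = {
    "chalk": {"5.6.1"},
    "debug": {"4.4.2"},
}


def find_compromised(lockfile_text: str, compromised: dict[str, set[str]]) -> list[tuple[str, str, str]]:
    """Return (install path, package name, version) for every flagged entry.

    Assumes the lockfileVersion 2/3 layout, where "packages" maps install
    paths such as "node_modules/chalk" to metadata that includes "version".
    """
    lock = json.loads(lockfile_text)
    hits = []
    for path, meta in lock.get("packages", {}).items():
        if not path:  # the root project is stored under the empty key ""
            continue
        # Take the name after the last "node_modules/" so nested packages and
        # scoped packages (e.g. "node_modules/@scope/pkg") are handled too.
        name = path.rsplit("node_modules/", 1)[-1]
        version = meta.get("version")
        if version in compromised.get(name, set()):
            hits.append((path, name, version))
    return hits


if __name__ == "__main__":
    sample = json.dumps({
        "lockfileVersion": 3,
        "packages": {
            "": {"name": "demo-app"},
            "node_modules/chalk": {"version": "5.6.1"},
            "node_modules/debug": {"version": "4.3.4"},
            "node_modules/foo/node_modules/debug": {"version": "4.4.2"},
        },
    })
    for path, name, version in find_compromised(sample, COMPROMISED):
        print(f"{name}@{version} at {path}")
```

In practice, `npm audit`, which checks your dependencies against a public advisory database, covers this once an advisory exists; a small script like this is mainly useful for a quick, targeted check across many repositories.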

With more and more code generated by AI agents and vibe coding platforms, is AI-generated code still vulnerable to these supply chain attacks?

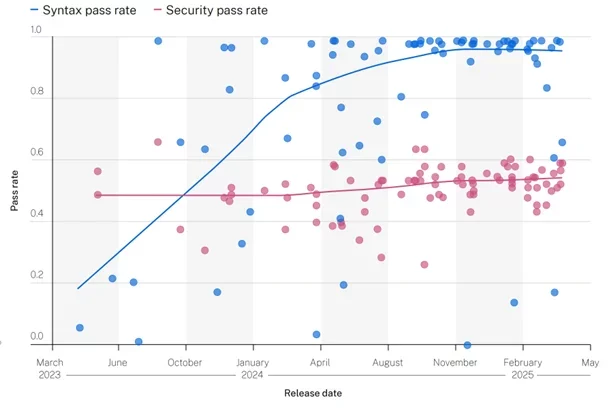

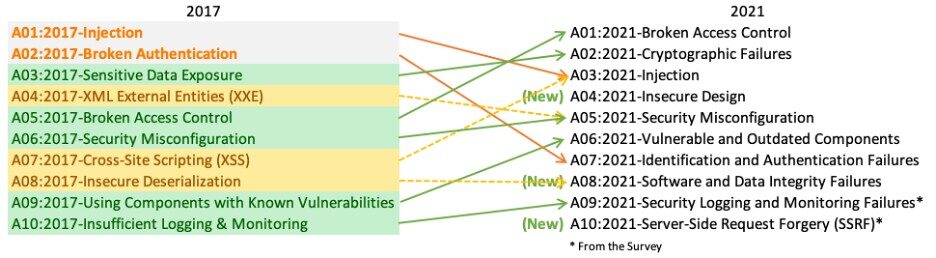

The answer is simply yes. While AI agents keep getting better at generating syntactically correct code, they are still poor at generating secure code. A recent GenAI security report shows AI getting almost exponentially better over the years at generating syntactically correct code that actually compiles. However, the same chart shows a flat line for AI-generated code violating the OWASP Top 10 security risks (see the red line in the chart). Many of the OWASP Top 10 issues, such as code injection, can be readily detected by static code analysis tools such as the SonarQube OWASP plugin.
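To make the injection category concrete, here is a minimal Python sketch using the standard-library `sqlite3` module; the `users` table and both function names are hypothetical. The first function builds SQL by string concatenation, a pattern common in quick tutorials and the kind of code static analysis tools typically flag. The second uses a parameterized query, so the input is always treated as data.

```python
import sqlite3


def find_user_unsafe(conn: sqlite3.Connection, name: str) -> list[tuple]:
    # Tutorial-style string concatenation: user input becomes part of the SQL
    # text, so a value like "' OR '1'='1" changes the meaning of the query.
    query = "SELECT id, name FROM users WHERE name = '" + name + "'"
    return conn.execute(query).fetchall()


def find_user_safe(conn: sqlite3.Connection, name: str) -> list[tuple]:
    # Parameterized query: the driver binds `name` as a value, never as SQL.
    return conn.execute("SELECT id, name FROM users WHERE name = ?", (name,)).fetchall()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
    conn.executemany("INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)])

    payload = "' OR '1'='1"
    print(find_user_unsafe(conn, payload))  # the injected condition matches every row
    print(find_user_safe(conn, payload))    # no user has that literal name
```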

You may be wondering why an AI agent does not generate secure code from the start.

Remember that an AI agent is built on a probabilistic model that makes inferences; it is not deterministic like a code compiler. At each step, it picks the most likely next piece of code (or token, if you like) to generate. One reason an agent does not always write secure code is that it is trained on large numbers of public code samples that are freely available in technical articles on the Internet.
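The "most likely next piece" idea can be illustrated with a deliberately simplified toy. A real LLM scores tokens with a neural network over a large vocabulary, and sampling settings such as temperature affect which token is picked; the sketch below instead just counts whole phrases in a made-up corpus and greedily picks the most frequent one. All names and counts are hypothetical. The point it shows: if insecure shortcuts dominate the training examples, a likelihood-driven choice tends to reproduce them.

```python
from collections import Counter

# Hypothetical toy "training data": continuations seen after the same prompt,
# e.g. "connect to the storage account using ...". The counts are made up.
seen_continuations = (
    ["a hardcoded shared access key"] * 7
    + ["a managed identity"] * 2
    + ["a key loaded from a secrets vault"] * 1
)


def most_likely_next(continuations: list[str]) -> tuple[str, float]:
    """Greedy choice: return the most frequent continuation and its share."""
    counts = Counter(continuations)
    choice, count = counts.most_common(1)[0]
    return choice, count / len(continuations)


if __name__ == "__main__":
    choice, p = most_likely_next(seen_continuations)
    # In this toy, the insecure pattern wins purely because it is most frequent.
    print(f"{choice} (p={p:.1f})")
```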

These code samples usually demonstrate a particular coding technique or programming concept and are meant for demonstration purposes only. Tutorials that help you get started quickly often rely on less secure shortcuts, such as shared access keys, and skip fine-grained authorization control. Such articles pay little attention to secure coding because they want to explain one concept. Principles like security by design and zero trust architecture (“never trust, always verify”) are left out to keep the focus on the concept at hand.
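Here is a minimal Python sketch of two habits that tutorial code tends to skip: keeping secrets out of the source code and checking permissions with a deny-by-default rule. The environment variable name `MY_SERVICE_API_KEY`, the roles, and the action names are hypothetical. In production, a managed secrets store is usually a better home for keys than plain environment variables.

```python
import os

# Tutorial style, shown only as an anti-pattern: the secret lives in the source
# code, so it ends up in version control and in every copy of the repository.
# API_KEY = "sk-test-1234"


def load_api_key(var_name: str = "MY_SERVICE_API_KEY") -> str:
    """Read the key from the environment (hypothetical variable name) and fail fast if missing."""
    key = os.environ.get(var_name)
    if not key:
        raise RuntimeError(f"{var_name} is not set; refusing to start without it")
    return key


# Fine-grained authorization, deny by default: each role lists exactly the
# actions it may perform; any role or action not listed here is refused.
PERMISSIONS = {
    "viewer": {"invoice:read"},
    "accountant": {"invoice:read", "invoice:create"},
}


def is_allowed(role: str, action: str) -> bool:
    return action in PERMISSIONS.get(role, set())
```

The deny-by-default choice means a typo in a role name or a newly added action results in a refusal rather than silent access, which is the "never trust, always verify" idea applied to a single check.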

Back to supply chain attacks. As we all know, an AI agent can suffer from hallucinations, often giving very convincing answers that are simply not correct. For AI-generated code, there’s a new term: “package hallucinations”. These are software packages that the generated code tells you to use, but that simply don’t exist in the real world.

The large-scale study published in the academic paper “We Have a Package for You! A Comprehensive Analysis of Package Hallucinations by Code Generating LLMs” tested 576,000 AI-generated code samples. Nearly 20% of the packages referenced in these samples simply didn’t exist. The results varied somewhat depending on the programming language of the generated code. Across different LLMs, the same non-existent packages kept coming back in the generated code. A hacker can spot this pattern and publish packages under the names the AI agent recommends.

The twist is that the hallucinated packages the AI agent refers to now do exist in the real world. This supply chain attack technique is known as slopsquatting: attackers register the “hallucinated packages” on trusted distribution platforms, and those packages contain malicious code that poisons your application at the source. The root problem is trusting the generated code too much without verifying that the suggested libraries are trustworthy before downloading them.

Speaking of vibe coding, the vibe coding platform Lovable has also had its share of security issues with AI-generated code. About 10% of the deployed web applications scanned for security issues exposed data such as personal and financial information to attackers, and API keys for cloud providers were publicly accessible. Cloud platform API keys are particularly attractive to hackers because they let them run phishing sites and other malicious applications on a cloud platform at someone else’s expense. It’s a good reminder to always set a budget limit on your cloud provider account.
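Keys like these often end up in front-end JavaScript bundles that anyone can download. As a minimal sketch of the idea, assuming you scan your built files before deploying, the Python below flags two widely documented key formats: AWS access key IDs (`AKIA` followed by 16 uppercase letters or digits) and Google API keys (`AIza` followed by 35 characters). Dedicated scanners such as gitleaks or trufflehog ship far more rules; the rule names and function name here are hypothetical. The AWS value in the example is the placeholder used in AWS documentation, not a real credential.

```python
import re

# Two well-known key formats; real secret scanners cover many more.
KEY_PATTERNS = {
    "AWS access key ID": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "Google API key": re.compile(r"AIza[0-9A-Za-z_\-]{35}"),
}


def scan_text(text: str) -> list[tuple[str, str]]:
    """Return (rule name, matched value) for every suspected secret in text."""
    findings = []
    for rule, pattern in KEY_PATTERNS.items():
        for match in pattern.finditer(text):
            findings.append((rule, match.group(0)))
    return findings


if __name__ == "__main__":
    bundle = 'const cfg = { awsKey: "AKIAIOSFODNN7EXAMPLE", region: "eu-west-1" };'
    for rule, value in scan_text(bundle):
        print(f"{rule}: {value[:8]}...")  # print only a prefix of the suspected secret
```

A match is only a hint: rotate any real key that shows up, and move the call that needs it to a back end so the key never reaches the browser.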

Many vibe coding users don’t have a technical background and have little or no knowledge of secure coding. They trust the vibe coding platform to handle the security of the deployed web application, but these platforms still have a long way to go. As Lovable said in a May 29 post on X: “We’re not where we want to be in terms of security, and we’re committed to continuing to improve the security posture for all Lovable users.”

I’m probably repeating myself, but an AI agent can be a great tool for a software engineer; just make sure you stay in the driver’s seat. Care about the code, whether it’s AI-generated or not, and never blindly follow the AI agent.

If you’re interested in learning more, check out these online articles on AI and secure coding.

Black Hat: Sloppy AI defenses take cybersecurity back to the 1990s, researchers say

AI code generators are writing vulnerable software nearly half the time by Brian Fagioli on nerds.xyz

npm debug and chalk packages compromised by Charlie Eriksen on aikido.dev

20 Popular npm Packages With 2 Billion Weekly Downloads Compromised in Supply Chain Attack by Ravie Lakshmanan on thehackernews.com

The Rise of Slopsquatting: How AI Hallucinations Are Fueling a New Class of Supply Chain Attacks by Sarah Gooding on socket.dev

The hottest new vibe coding startup may be a sitting duck for hackers by Reed Albergotti on semafor.com

We Have a Package for You! A Comprehensive Analysis of Package Hallucinations by Code Generating LLMs academic article by different authors